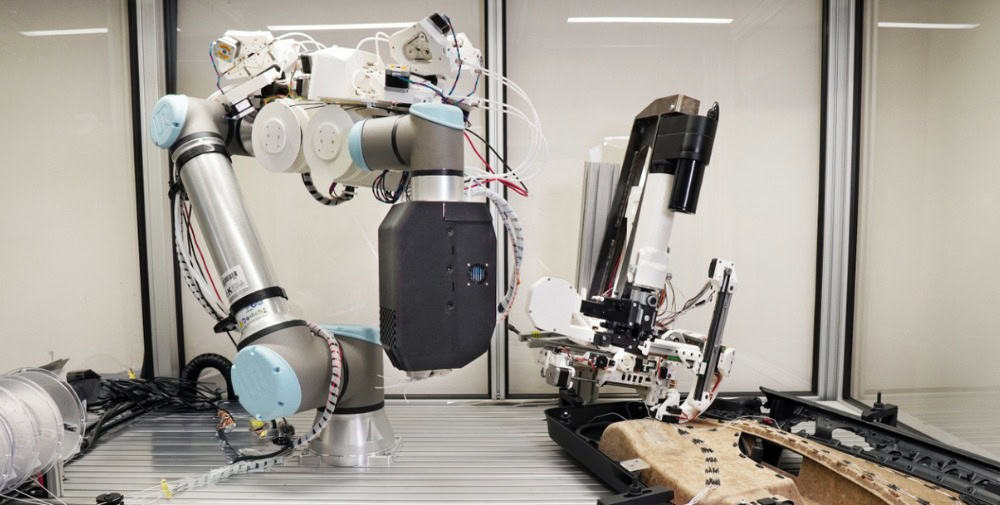

1. Scanning the Object with the Robot’s Camera

The process begins with the robot utilizing its integrated camera system to scan the target object. The robot captures high-resolution images and possibly other sensory data to create a detailed digital representation of the object. This initial scan gathers essential information about the object’s size, shape, texture, and other physical properties, which are critical for accurate interaction.

2. Placing the Object on a Surface

After the initial scanning, the object is placed on a specific surface within the robot’s operating environment. Positioning the object on a surface allows the robot to understand the context of the object’s placement relative to its surroundings. This step is crucial for tasks that involve picking up or manipulating the object from a particular location.

3. Secondary Scanning by the Robot

With the object positioned, the robot performs a secondary scan to update its understanding of the object’s location and orientation in the environment. This scan ensures that any changes in the object’s position are accounted for, providing the robot with real-time data necessary for precise interaction.

4. Demonstration Through Hand Interaction and 3D Data Recording

A human operator demonstrates the desired task by interacting with the object manually, such as picking it up, moving it, or performing a specific manipulation. During this demonstration, the robot records the entire process using its camera system. Advanced software converts the recorded visual data into three-dimensional (3D) data models, capturing the nuances of the movement, including hand positioning and motion trajectories. This 3D data serves as a detailed template for the robot to learn the task.

5. Utilizing Data in the Omniverse for 3D Scene Generation and Robot Path Practice

The collected 3D data is then imported into a virtual simulation environment, often referred to as the “Omniverse.” Within this virtual space, a 3D scene replicating the real-world environment and the demonstrated task is generated. The robot uses this simulation to practice the task repeatedly, refining its movements and interactions. Machine learning algorithms analyze the robot’s performance, allowing it to optimize its path and actions. The robot continues this iterative process in the virtual environment until it can accurately replicate the demonstrated movement, including grasping and manipulating the object as intended.

6. Transferring the Trained Digital Twin Model to the Robot for Execution

Once the robot has successfully learned and practiced the task in the virtual environment, the trained digital twin model—which encompasses all the learned behaviors and movement patterns—is transferred back to the physical robot. This model update equips the robot with the necessary instructions to perform the task in the real world. The robot then executes the movement autonomously, applying the precise motions and interactions it mastered during the virtual simulations.

Conclusion

The Visio-to-Teach technology streamlines the process of programming robots by replacing traditional coding with visual demonstrations and simulation-based learning. By observing human actions and practicing in a virtual environment, robots can quickly learn complex tasks with high precision. This technology enhances the robot’s adaptability and efficiency, making it a powerful tool in various industries where robotic automation is essential.