The rise of SpiNNaker2 as an event-based platform for hybrid AI

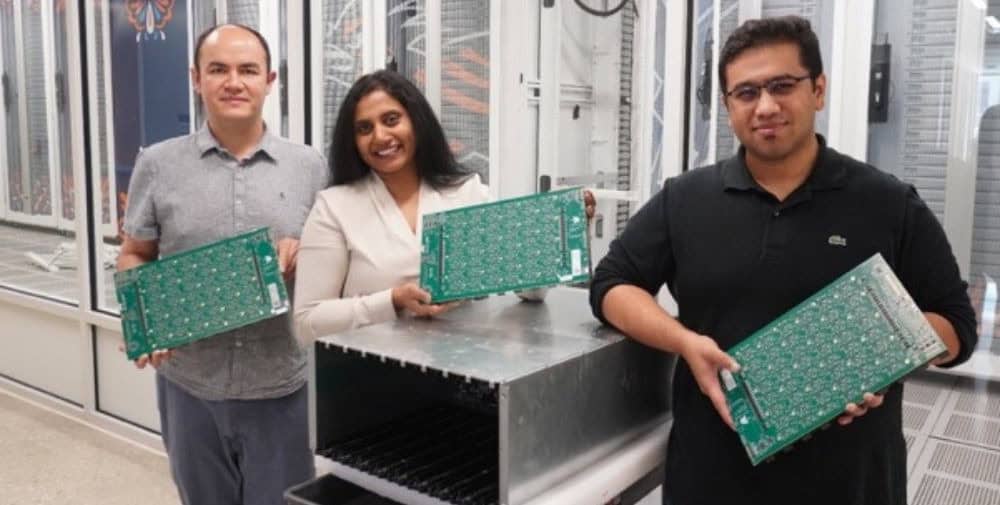

Fast forward to 2024: today, we at SpiNNcloud Systems in Dresden, Germany, are publicly unveiling the next iteration of this technology. The SpiNNaker2 system, developed primarily at the chair of Prof. Dr. Christian Mayr at the Technical University of Dresden, has evolved from its predecessor into an event-based platform for AI while significantly improving on all of its original features.

The technology we are introducing today offers a way to overcome the inherent limitations of traditional AI models. Current models, while powerful and ubiquitous in our lives, lack transparency and explainability, require huge amounts of training data and, as Steve Furber mentions in the video, consume a ridiculous amount of energy. These problems stem from the limitations of the three main types of AI:

1) Deep neural networks (also known as connectionist or statistical approaches): These have enabled impressive practical applications by handling very large datasets and complex computations. However, they are often not explainable, struggle to generalize knowledge from one context to another, and their sheer size makes them extremely inefficient.

2) Symbolic AI/expert systems: These operate according to strict rules, which makes them very reliable, comprehensible and explainable. However, they rely on experts to encode their knowledge and adapt poorly to new situations, since they do not learn from the huge datasets that statistical approaches exploit.

3) Neuromorphic models: These brain-inspired neural networks adopt some of the most fascinating principles of the human brain, such as energy efficiency, event-based operation and massive parallelism. However, they typically model the brain bottom-up and in great detail, which often leaves higher-level cognitive processes strongly simplified.

To address these challenges, hybrid AI models combine the strengths of these approaches to improve robustness, scalability, practicality and energy efficiency. This combination of different AI principles is often referred to as the “third wave of AI”, a term coined by DARPA to describe systems that can understand contextual nuances and adapt dynamically. The heterogeneity of these models gives them a strong chance of bridging the gap between human-like reasoning and machine efficiency, paving the way for general-purpose AI with an acceptable error rate.

So what does this have to do with hardware, or more specifically, SpiNNaker2? It turns out that GPUs and the new wave of industrial deep neural network accelerators have focused heavily on building machines that efficiently crunch the numbers these networks need, leaving little room for rule-based programmability. Furthermore, these new accelerators are still built on the synchronous primitives inherited from GPUs, which prevents them from being as efficient as our brains. A hardware platform that can run rule-based machines and accelerate deep neural networks while operating as efficiently as a brain therefore has the potential to revolutionize the large-scale deployment of these robust models.

One particular algorithm, NARS-GPT, which has outperformed GPT-4 on reasoning tasks, uses a neurosymbolic engine that is nearly impossible to parallelize on an HPC system based on GPUs or other dataflow-driven hardware. In NARS-GPT, deep neural networks extract features from the environment, which are then fed into a knowledge graph that NARS (short for Non-Axiomatic Reasoning System) builds using a rule-based (or symbolic) approach. To the best of our knowledge, and that of the authors of these neurosymbolic reasoners, hybrid hardware is the only way to scale and efficiently deploy these models. Such hardware offers the potential to integrate learning, reasoning and efficient processing not only at the chip level, but also at the system level.
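The split between a neural perception stage and a symbolic reasoning stage can be sketched in a few lines of Python. This toy example is not NARS or NARS-GPT. `extract_features` is a hypothetical stand-in for a deep neural network that simply thresholds pre-computed label scores. The rule engine uses plain forward chaining, where a conclusion's confidence is the product of its premises' confidences. That rule is an assumption chosen for simplicity, not NARS's own truth functions.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    premises: tuple[str, ...]   # facts that must all be known for the rule to fire
    conclusion: str             # fact derived when the rule fires

def extract_features(scores: dict[str, float], threshold: float = 0.5) -> dict[str, float]:
    """Hypothetical stand-in for a neural perception stage: keep the labels
    whose score reaches the threshold and reuse the score as a confidence."""
    return {label: s for label, s in scores.items() if s >= threshold}

def forward_chain(facts: dict[str, float], rules: list[Rule]) -> dict[str, float]:
    """Fire rules until no new fact (or higher confidence) is derived.

    Assumes confidences lie in [0, 1]. A conclusion's confidence is the
    product of its premises' confidences (a simplifying assumption).
    """
    known = dict(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if all(p in known for p in rule.premises):
                confidence = math.prod(known[p] for p in rule.premises)
                if confidence > known.get(rule.conclusion, 0.0):
                    known[rule.conclusion] = confidence
                    changed = True
    return known

if __name__ == "__main__":
    perceived = extract_features({"wet_road": 0.9, "rain": 0.8, "dog": 0.2})
    rules = [Rule(("rain", "wet_road"), "slippery"), Rule(("slippery",), "slow_down")]
    known = forward_chain(perceived, rules)
    print(round(known["slow_down"], 2))  # 0.72
```

The rule loop is branch-heavy, and how much work it does depends on which facts happen to be known. That contrasts with the dense, regular arithmetic that GPUs are optimized for.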

The future of HPC with SpiNNaker2

As already indicated, SpiNNaker2, the main building block of our solution, is designed specifically to meet these demanding requirements. Each chip is a low-power network of 152 Arm-based cores, providing the parallel processing capability needed to handle the complex, dynamic data typical of hybrid AI systems. Its architecture supports high-speed, energy-proportional interconnects that are essential for scaling and communicating across multiple chips, enabling large-scale, distributed neural network deployments. The chip also includes native accelerators for neuromorphic computing tasks, such as exponential and logarithmic functions and true random number generation, as well as custom accelerators for efficient machine learning computations.
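Exponentials and random numbers sit in the inner loop of many neuron models, which is why dedicated units for them pay off. The Python sketch below steps a leaky integrate-and-fire (LIF) neuron. `math.exp` gives the membrane's decay factor, and a seeded `random.Random` adds optional noisy input. This is generic model code written for illustration, not the SpiNNaker2 software stack. Python's pseudo-random generator merely stands in for the chip's true random number generator, and the parameter values are arbitrary.

```python
import math
import random

def lif_step(v: float, current: float, dt: float, tau: float,
             v_thresh: float, v_reset: float,
             rng: random.Random, noise_std: float = 0.0) -> tuple[float, bool]:
    """Advance a leaky integrate-and-fire neuron by one time step."""
    decay = math.exp(-dt / tau)              # exponential: candidate for an exp accelerator
    # Exact solution of tau * dv/dt = -v + current, with current held constant over dt
    v = v * decay + current * (1.0 - decay)
    if noise_std > 0.0:
        v += rng.gauss(0.0, noise_std)       # random input: candidate for a hardware RNG
    if v >= v_thresh:
        return v_reset, True                 # spike, then reset the membrane
    return v, False

def simulate(steps: int, current: float, tau: float = 10.0, dt: float = 1.0,
             v_thresh: float = 1.0, v_reset: float = 0.0,
             noise_std: float = 0.0, seed: int = 0) -> list[int]:
    """Return the time steps at which the neuron spiked."""
    rng = random.Random(seed)                # pseudo-random stand-in for a true RNG
    v = v_reset
    spikes = []
    for t in range(steps):
        v, fired = lif_step(v, current, dt, tau, v_thresh, v_reset, rng, noise_std)
        if fired:
            spikes.append(t)
    return spikes

if __name__ == "__main__":
    print(simulate(30, current=2.0))  # [6, 13, 20, 27]
```

Because the decay factor depends only on `dt` and `tau`, a real implementation would compute it once. It is recomputed here to show where the exponential sits in the update.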

This scalability is enabled by the chip’s lightweight, event-based network-on-chip, which supports fast and efficient data transfer throughout the system. This is critical for tasks that require real-time processing across many nodes, such as complex AI computations and large-scale neural simulations. In addition, the globally asynchronous, locally synchronous (GALS) architecture lets each part of the chip run on its own clock and operate in an interrupt-driven manner, significantly reducing bottlenecks and improving overall system performance at scale. Finally, native support for dynamic voltage and frequency scaling (DVFS) at the core level lets each core’s power consumption follow its workload, which helps keep the system efficient at every level of abstraction.
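The benefit of event-based, interrupt-driven operation can be illustrated with a minimal discrete-event sketch in Python. A priority queue stands in for the network-on-chip, and a neuron's state is touched only when a packet addressed to it arrives. A clock-driven design, by contrast, updates every neuron on every tick. The packet format, fixed delay and non-leaky neuron model are illustrative assumptions, not SpiNNaker2's actual routing protocol or software interface. GALS clocking and DVFS are not modeled.

```python
import heapq
from collections import defaultdict

# Hypothetical packet format: (arrival_time, target_neuron, weight)
Packet = tuple[int, int, float]

def run_event_driven(inputs: list[Packet],
                     synapses: dict[int, list[tuple[int, float]]],
                     threshold: float = 1.0,
                     delay: int = 1,
                     t_max: int = 100) -> tuple[list[tuple[int, int]], int]:
    """Simulate non-leaky integrate-and-fire neurons driven purely by events.

    Returns the (time, neuron) spikes and how many times a packet handler
    ran, i.e. how much work was actually done.
    """
    queue = list(inputs)
    heapq.heapify(queue)                 # packets ordered by arrival time
    potential: dict[int, float] = defaultdict(float)
    spikes: list[tuple[int, int]] = []
    handler_calls = 0
    while queue:
        t, neuron, weight = heapq.heappop(queue)
        if t > t_max:
            break                        # remaining packets fall outside the simulated window
        handler_calls += 1               # a neuron is only touched when a packet arrives
        potential[neuron] += weight
        if potential[neuron] >= threshold:
            potential[neuron] = 0.0      # reset after firing
            spikes.append((t, neuron))
            for target, w in synapses.get(neuron, []):
                heapq.heappush(queue, (t + delay, target, w))
    return spikes, handler_calls

if __name__ == "__main__":
    # Chain 0 -> 1 -> 2, kicked off by a single input packet at t = 0.
    chain = {0: [(1, 1.0)], 1: [(2, 1.0)]}
    spikes, calls = run_event_driven([(0, 0, 1.0)], chain)
    print(spikes, calls)  # [(0, 0), (1, 1), (2, 2)] 3
```

In this example only three handlers run. A clock-driven loop over the same window would update all three neurons at each of the 101 time steps (t = 0 to 100), 303 updates in total, to produce the same three spikes.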

As a neuromorphic supercomputer, the SpiNNcloud platform remains highly competitive, achieving a scale, measured in the number of neurons, that no other system in the world can reach today. The SpiNNcloud platform deployed in Dresden has the capacity to emulate at least 5 billion neurons. In addition, the SpiNNaker2 architecture is highly flexible. It can natively run not only deep neural networks, symbolic models and spiking neural networks, but practically any other computation that can be expressed as a computational graph. With this combination of scale and flexibility, the SpiNNcloud platform is designed to reach supercomputing performance levels while maintaining high peak efficiency and reliability. This makes it well suited to demanding AI challenges such as those that lie ahead in the third wave of AI.
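As a loose illustration of running "any computation that can be expressed as a computational graph", the Python sketch below uses the standard library's `graphlib.TopologicalSorter` to split a small graph into waves of nodes whose inputs are ready. It assigns each wave's nodes round-robin to hypothetical core indices and evaluates them. The function names and placement policy are assumptions for illustration. A real mapping onto SpiNNaker2 cores would also have to weigh memory, communication and routing, which this sketch ignores.

```python
import operator
from graphlib import TopologicalSorter
from typing import Any, Callable

def schedule_waves(graph: dict[str, tuple[str, ...]]) -> list[list[str]]:
    """Split a DAG (node -> predecessors) into waves of nodes whose inputs are
    all available; nodes in the same wave could run concurrently on different cores."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()                      # raises CycleError if the graph has a cycle
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves

def run_graph(graph: dict[str, tuple[str, ...]],
              ops: dict[str, Callable[..., Any]],
              inputs: dict[str, Any],
              num_cores: int) -> tuple[dict[str, Any], dict[str, int]]:
    """Evaluate the graph wave by wave, recording a naive core placement."""
    values: dict[str, Any] = dict(inputs)
    placement: dict[str, int] = {}
    for wave in schedule_waves(graph):
        for i, node in enumerate(wave):
            placement[node] = i % num_cores   # round-robin within each wave
            if node not in values:            # input nodes already carry their values
                values[node] = ops[node](*(values[p] for p in graph[node]))
    return values, placement

if __name__ == "__main__":
    # out = (x + y) * y - 1
    graph = {"sum": ("x", "y"), "prod": ("sum", "y"), "out": ("prod",)}
    ops = {"sum": operator.add, "prod": operator.mul, "out": lambda v: v - 1}
    values, placement = run_graph(graph, ops, {"x": 2, "y": 3}, num_cores=2)
    print(values["out"])          # 14
    print(schedule_waves(graph))  # [['x', 'y'], ['sum'], ['prod'], ['out']]
```

Only the first wave has more than one node here, so only `x` and `y` land on different cores. Wider graphs, such as the many independent neurons of a layer, would produce wider waves and more opportunity for parallelism.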

Steve Furber once told me in an interview that, while Arm technology has had a significant impact, he sees the SpiNNaker invention as a more disruptive and fundamental contribution because it aims to draw practical inspiration from the secrets of the human brain.

– – – – – –

Further links

👉 https://spinncloud.com

Photo: pixabay