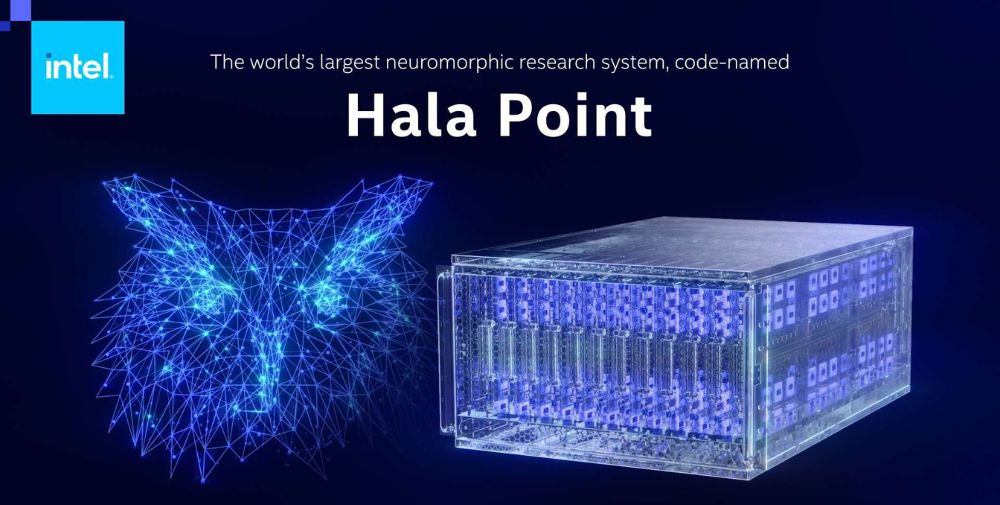

What it does: Hala Point is the first large-scale neuromorphic system to demonstrate state-of-the-art computational efficiency on mainstream AI workloads. Characterization shows that it can support up to 20 quadrillion operations per second, or 20 petaops, with an efficiency of more than 15 trillion 8-bit operations per second per watt (TOPS/W) when running conventional deep neural networks. This exceeds the levels achieved by architectures built on graphics processing units (GPUs) and central processing units (CPUs). In the future, Hala Point’s unique capabilities could enable real-time continuous learning for AI applications such as scientific and engineering problem solving, logistics, smart city infrastructure management, large language models (LLMs) and AI agents.

How it’s used: Sandia National Laboratories researchers plan to use Hala Point for advanced brain-scale computing research. The organization will focus on solving scientific computational problems in the areas of device physics, computer architecture, computer science and informatics.

“Working with Hala Point enhances our Sandia team’s ability to solve computational and scientific modeling problems. Research with a system of this size will allow us to keep pace with the evolution of artificial intelligence in areas ranging from business to defense to basic research,” said Craig Vineyard, Hala Point team leader at Sandia National Laboratories.

Currently, Hala Point is a research prototype that will enhance the capabilities of future commercial systems. Intel anticipates that these findings will lead to practical advances, such as the ability of LLMs to continuously learn from new data. Such advances promise to significantly reduce the prohibitive training overhead in widespread AI deployments.

Why this matters: Recent trends in scaling deep learning models to trillions of parameters have highlighted the daunting challenges to AI sustainability and the need for innovation at the lowest levels of hardware architecture. Neuromorphic computing is a fundamentally new approach that draws on insights from neuroscience and integrates memory and computing with highly granular parallelism to minimize data movement. In results published this month at the International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Loihi 2 is shown to increase the efficiency, speed, and adaptability of emerging small-scale edge workloads [1] by orders of magnitude.

Hala Point is an evolution of its predecessor Pohoiki Springs with numerous improvements and now brings neuromorphic performance and efficiency gains to traditional deep learning models, especially for processing real-time workloads such as video, speech, and wireless communications. For example, Ericsson Research is using Loihi 2 to optimize the efficiency of telecom infrastructure, as demonstrated at this year’s Mobile World Congress.

About Hala Point: The Loihi 2 neuromorphic processors that form the basis for Hala Point apply brain-inspired computing principles, such as asynchronous, event-based spiking neural networks (SNNs), integrated memory and computing, and sparse and continuously changing connections, to improve energy efficiency and performance by orders of magnitude. The neurons communicate directly with each other rather than through memory, reducing overall power consumption.
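To make the event-based, sparse principle more concrete, here is a minimal toy sketch in Python. It is not Loihi 2's actual design or API: the function `propagate`, the dictionary layout of `synapses`, and the threshold-and-reset rule are all assumptions chosen for illustration. The point it shows is that work is done only when a spike event arrives, and only along connections that exist.

```python
# Illustrative toy only: NOT Loihi 2's implementation. All names are hypothetical.
# Each source neuron keeps its own sparse list of outgoing synapses, so compute
# happens only for neurons that actually spiked and only on existing connections.

def propagate(spikes, synapses, potentials, threshold=1.0):
    """Deliver spike events; return (indices of neurons that fire, synaptic ops done).

    spikes:     iterable of source-neuron indices that fired this step
    synapses:   dict mapping source index -> list of (target index, weight)
    potentials: list of membrane potentials, updated in place
    """
    touched = set()
    ops = 0
    for src in spikes:
        for dst, weight in synapses.get(src, []):
            potentials[dst] += weight  # one synaptic operation per delivered event
            touched.add(dst)
            ops += 1
    fired = sorted(d for d in touched if potentials[d] >= threshold)
    for d in fired:
        potentials[d] = 0.0  # simple reset after firing (an assumed rule)
    return fired, ops


# Four neurons, three synapses. Neurons 0 and 1 spike; neurons 2 and 3 receive input.
synapses = {0: [(2, 0.6), (3, 1.2)], 1: [(2, 0.5)]}
potentials = [0.0, 0.0, 0.0, 0.0]
fired, ops = propagate([0, 1], synapses, potentials)
print(fired, ops)
```

In this sketch a step with no incoming spikes performs zero synaptic operations, which is the intuition behind the power savings described above; a dense matrix multiply would do the same amount of work regardless of activity.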

Hala Point packs 1,152 Loihi 2 processors, manufactured on the Intel 4 process node, into a six-rack-unit data center chassis the size of a microwave oven. The system supports up to 1.15 billion neurons and 128 billion synapses distributed across 140,544 neuromorphic cores and consumes a maximum of 2,600 watts of power. It also includes over 2,300 embedded x86 processors for additional computation.
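As a rough sanity check, the system totals above can be broken down per chip and per core. The short Python sketch below uses only the figures quoted in this article; the derived per-chip and per-core values are back-of-the-envelope averages, not official specifications, and the power figure in particular is a system-level maximum that also covers the embedded x86 processors and other overhead.

```python
# Back-of-the-envelope breakdown of the system totals quoted in the article.
# Derived values are simple averages, not official per-chip specifications.
PROCESSORS = 1_152
CORES = 140_544
NEURONS = 1.15e9
SYNAPSES = 128e9
MAX_POWER_W = 2_600

cores_per_chip = CORES / PROCESSORS          # divides evenly
neurons_per_chip = NEURONS / PROCESSORS      # roughly one million
neurons_per_core = NEURONS / CORES
synapses_per_neuron = SYNAPSES / NEURONS
watts_per_chip = MAX_POWER_W / PROCESSORS    # includes all system overhead

print(f"cores per chip:      {cores_per_chip:.0f}")
print(f"neurons per chip:    {neurons_per_chip:,.0f}")
print(f"neurons per core:    {neurons_per_core:,.0f}")
print(f"synapses per neuron: {synapses_per_neuron:.1f}")
print(f"watts per chip:      {watts_per_chip:.2f}")
```

The totals are internally consistent: 140,544 cores divide evenly into 122 cores per processor, and 1.15 billion neurons works out to about one million neurons per processor.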

Hala Point integrates processing, memory and communication channels in a massively parallelized fabric that provides a total memory bandwidth of 16 petabytes per second (PB/s), a communication bandwidth of 3.5 PB/s between cores and a communication bandwidth of 5 terabytes per second (TB/s) between chips. The system can process more than 380 trillion 8-bit synaptic operations and more than 240 trillion neuron operations per second.

Applying bio-inspired spiking neural network models, the system can execute its full capacity of 1.15 billion neurons 20 times faster than a human brain and up to 200 times faster at lower capacity. While Hala Point is not intended for neuroscience modeling, its neuron capacity is roughly equivalent to that of an owl brain or the cortex of a capuchin monkey.

Loihi-based systems can perform AI inference and solve optimization problems using 100 times less energy and at speeds up to 50 times faster than traditional CPU and GPU architectures [1]. By exploiting up to 10:1 sparse connectivity and event-driven activity, initial results on Hala Point show that the system can achieve deep neural network efficiencies of up to 15 TOPS/W [2] without the need to collect input data in batches, a common optimization for GPUs that significantly delays the processing of data arriving in real time, such as video from cameras. Future neuromorphic LLMs capable of continuous learning, while still in the research phase, could enable gigawatt-hour energy savings by eliminating the need for regular retraining with ever-growing data sets.
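To illustrate why sparsity and event-driven activity matter, the following Python sketch works through the benchmark network described in note 2 (14,784 layers, 2,048 neurons per layer, 10:1 pruning, 10 percent activation rate). It rests on two assumptions that the article does not state: that each layer is a fully connected 2,048 × 2,048 weight matrix before pruning, and that 10:1 means one in ten weights is kept. The result is a naive estimate that ignores all hardware overhead, and it says nothing about how the published TOPS/W figure was counted.

```python
# Naive estimate of how pruning and sparse activations shrink the work per pass.
# Inputs come from note 2 of the article; the dense 2048x2048 layer shape and the
# "keep 1 in 10 weights" reading of 10:1 are assumptions made for illustration.
LAYERS = 14_784
NEURONS_PER_LAYER = 2_048
PRUNING_RATIO = 10       # assumed: 10:1 pruning keeps one weight in ten
ACTIVATION_RATE = 0.10   # fraction of neurons sending an event per step

total_neurons = LAYERS * NEURONS_PER_LAYER
dense_weights = LAYERS * NEURONS_PER_LAYER ** 2
kept_weights = dense_weights / PRUNING_RATIO
synaptic_events = kept_weights * ACTIVATION_RATE   # weights actually touched
reduction = dense_weights / synaptic_events

print(f"neurons in network:      {total_neurons:,}")
print(f"dense weights:           {dense_weights:,}")
print(f"weights after pruning:   {kept_weights:,.0f}")
print(f"synaptic events per pass: {synaptic_events:,.0f}")
print(f"reduction vs. dense:     {reduction:.0f}x")
```

Under these assumptions the network has about 30 million neurons and about 6.2 billion weights after pruning, both well within the 1.15 billion neurons and 128 billion synapses the system supports, and an event-driven pass touches roughly one hundredth of the weights a dense pass would.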

What’s next? The delivery of Hala Point to Sandia National Laboratories marks the first large-scale deployment of a new family of neuromorphic research systems that Intel plans to share with its research collaborators. Further development will enable neuromorphic computing applications to overcome performance and latency constraints that limit the deployment of AI capabilities in the real world and in real time.

Together with an ecosystem of more than 200 members of the Intel Neuromorphic Research Community (INRC), including leading academic groups, government labs, research institutions and companies worldwide, Intel is working to push the boundaries of brain-inspired AI and advance this technology from research prototypes to industry-leading commercial products in the coming years.

[1] See “Efficient Video and Audio Processing with Loihi 2”, International Conference on Acoustics, Speech, and Signal Processing, April 2024, and “Advancing Neuromorphic Computing with Loihi: Survey of Results and Outlook”, Proceedings of the IEEE, 2021.

[2] Characterization was performed using a multilayer perceptron (MLP) network with 14,784 layers, 2,048 neurons per layer, and 8-bit weights, stimulated with random noise. The Hala Point implementation of the MLP network was pruned to 10:1 sparsity, with sigma-delta neuron models providing activation rates of 10 percent. Results are as of testing in April 2024. Results may vary.

Photo: Intel