“As organizations implement ever-larger AI models and data sets, it’s critical that the accelerators within the system can handle compute-intensive workloads with high performance and flexibility to scale,” said Philip Guido, executive vice president and chief commercial officer, AMD. “AMD Instinct Accelerators combined with AMD ROCm software provide comprehensive support, including IBM watsonx AI, Red Hat Enterprise Linux AI and Red Hat OpenShift AI platforms, to build leading frameworks with these powerful open ecosystem tools. Our collaboration with IBM Cloud will aim to enable customers to run and scale Gen AI inferencing without sacrificing cost, performance or efficiency.”

“AMD and IBM Cloud share the same vision of bringing AI to the enterprise. We are committed to making the power of AI accessible to enterprise customers, helping them prioritize their outcomes and ensuring they are in control of their AI deployment,” said Alan Peacock, General Manager of IBM Cloud. “By leveraging AMD’s Accelerators in the IBM Cloud, our enterprise clients will have another option to scale to meet their AI needs while helping them optimize cost and performance.”

IBM and AMD are working together to deliver MI300X Accelerators as a service in the IBM Cloud to help enterprise clients leverage AI. To help enterprise customers across multiple industries, including highly regulated ones, IBM and AMD intend to fully leverage the security and compliance capabilities of the IBM Cloud.

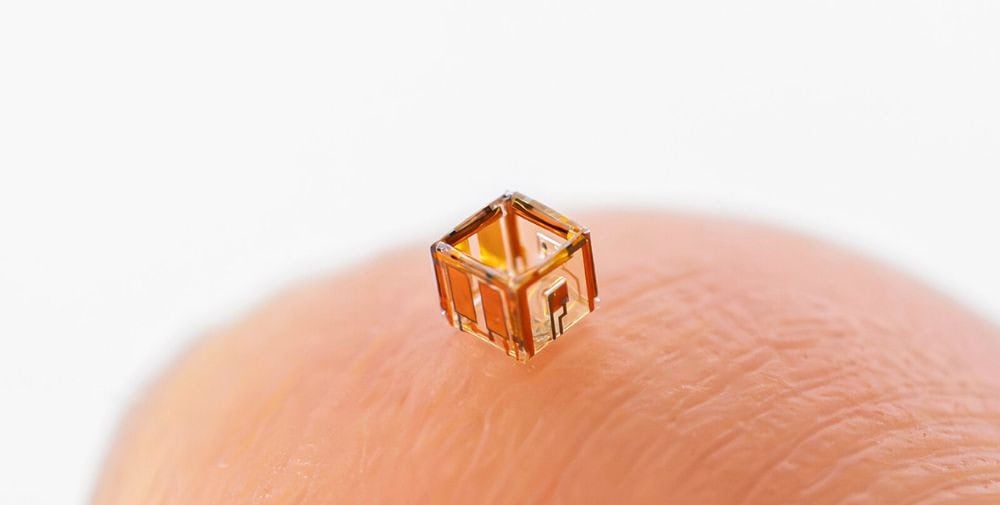

- Support for Large Model Inferencing: Equipped with 192 GB of High Bandwidth Memory (HBM3), AMD Instinct MI300X Accelerators provide support for large model inferencing and fine-tuning. The large memory capacity can also help customers run larger models with fewer GPUs, potentially lowering the cost of inferencing.

- Improved Performance and Security: Deploying AMD Instinct MI300X Accelerators as a service on IBM Cloud Virtual Servers for VPC, with container support through IBM Cloud Kubernetes Service and IBM Red Hat OpenShift on IBM Cloud, can help optimize the performance of enterprise AI applications.
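
As a rough illustration of the memory point in the first bullet, the sketch below estimates how many accelerators are needed just to hold a model's weights. Only the 192 GB figure comes from the announcement. The function names, the 16-bit (2 bytes per parameter) default, the 70B and 180B model sizes, and the 80 GB comparison device are assumptions chosen for illustration. Real deployments also need memory for the KV cache, activations and runtime overhead, so actual GPU counts may be higher.

```python
import math

# Stated in the announcement: HBM3 capacity of one AMD Instinct MI300X.
HBM_PER_MI300X_GB = 192


def weights_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    Assumes 2 bytes per parameter (FP16/BF16) unless told otherwise.
    """
    return params_billion * bytes_per_param


def min_gpus(params_billion: float, memory_per_gpu_gb: float,
             bytes_per_param: int = 2) -> int:
    """Lower bound on GPUs needed to hold the weights alone."""
    return math.ceil(weights_gb(params_billion, bytes_per_param) / memory_per_gpu_gb)


if __name__ == "__main__":
    # Hypothetical model sizes and a hypothetical 80 GB comparison device.
    for size in (70, 180):
        print(
            f"{size}B params, 16-bit weights: {weights_gb(size):.0f} GB, "
            f"at least {min_gpus(size, HBM_PER_MI300X_GB)} x 192 GB "
            f"vs. at least {min_gpus(size, 80)} x 80 GB"
        )
```

Under these assumptions, a 70-billion-parameter model at 16-bit precision needs about 140 GB for weights, which fits within a single 192 GB accelerator but would have to be split across at least two 80 GB devices.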

For generative AI inferencing workloads, IBM plans to enable support for AMD Instinct MI300X Accelerators in IBM’s watsonx AI and data platform and provide watsonx customers with additional AI infrastructure resources to scale their AI workloads in hybrid cloud environments. In addition, the Red Hat Enterprise Linux AI and Red Hat OpenShift AI platforms can run Granite-family large language models (LLMs) with alignment tools using InstructLab on MI300X Accelerators.

IBM Cloud with AMD Instinct MI300X Accelerators is expected to be generally available in the first half of 2025. Stay tuned for more updates from AMD and IBM in the coming months.

Statements regarding IBM’s future direction and intent are subject to change or withdrawal without notice and represent goals and intentions only.

– – – – –

Further links

👉 www.ibm.com

👉 Information on the GPU and Accelerator offerings

Photo: IBM